Jack Fairhead

Founded Date November 21, 2006

Sectors Healthcare

Company Description

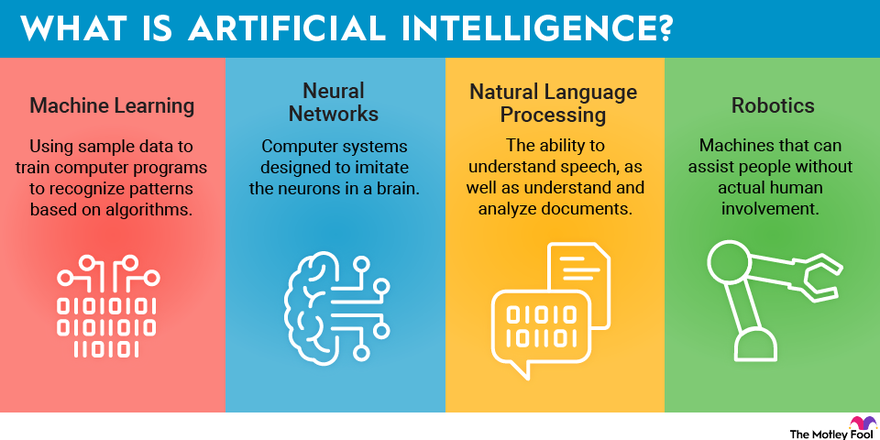

MIT Researchers Develop an Efficient Way to Train More Reliable AI Agents

Fields ranging from robotics to medicine to political science are attempting to train AI systems to make meaningful decisions of all kinds. For instance, using an AI system to intelligently control traffic in a congested city could help motorists reach their destinations faster, while improving safety or sustainability.

Unfortunately, teaching an AI system to make good decisions is no easy task.

Reinforcement learning models, which underlie these AI decision-making systems, still frequently fail when faced with even small variations in the tasks they are trained to perform. In the case of traffic, a model may struggle to control a set of intersections with different speed limits, numbers of lanes, or traffic patterns.

To boost the reliability of reinforcement learning models for complex tasks with variability, MIT researchers have introduced a more efficient algorithm for training them.

The algorithm strategically selects the best tasks for training an AI agent so it can effectively perform all tasks in a collection of related tasks. In the case of traffic signal control, each task could be one intersection in a task space that includes all intersections in the city.

By focusing on a smaller number of intersections that contribute the most to the algorithm’s overall effectiveness, this method maximizes performance while keeping the training cost low.

The researchers found that their technique was between 5 and 50 times more efficient than standard approaches on a range of simulated tasks. This gain in efficiency helps the algorithm learn a better solution faster, ultimately improving the performance of the AI agent.

“We were able to see incredible performance improvements, with a very simple algorithm, by thinking outside the box. An algorithm that is not very complicated stands a better chance of being adopted by the community because it is easier to implement and easier for others to understand,” says senior author Cathy Wu, the Thomas D. and Virginia W. Cabot Career Development Associate Professor in Civil and Environmental Engineering (CEE) and the Institute for Data, Systems, and Society (IDSS), and a member of the Laboratory for Information and Decision Systems (LIDS).

She is joined on the paper by lead author Jung-Hoon Cho, a CEE graduate student; Vindula Jayawardana, a graduate student in the Department of Electrical Engineering and Computer Science (EECS); and Sirui Li, an IDSS graduate student. The research will be presented at the Conference on Neural Information Processing Systems.

Finding a happy medium

To train an algorithm to control traffic lights at many intersections in a city, an engineer would typically choose between two main approaches. She can train one algorithm for each intersection independently, using only that intersection’s data, or train a larger algorithm using data from all intersections and then apply it to each one.

But each approach comes with its share of downsides. Training a separate algorithm for each task (such as a given intersection) is a time-consuming process that requires an enormous amount of data and computation, while training one algorithm for all tasks often leads to subpar performance.

Wu and her collaborators sought a sweet spot between these two approaches.

For their method, they choose a subset of tasks and train one algorithm for each task independently. Importantly, they strategically select the individual tasks that are most likely to improve the algorithm’s overall performance on all tasks.

They leverage a common technique from the reinforcement learning field called zero-shot transfer learning, in which an already trained model is applied to a new task without being further trained. With transfer learning, the model often performs remarkably well on the new neighboring task.
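To make zero-shot transfer concrete, here is a toy Python sketch. It is not the researchers’ code: it assumes each task is described by a single number (an intersection’s speed limit), replaces reinforcement learning with a hypothetical `train_policy` stub, and uses a made-up `evaluate` reward that falls off as the target task drifts away from the training task. The point it illustrates is only that the policy trained on one task is reused on the others with no further updates.

```python
# Toy zero-shot transfer (hypothetical setup, not the MIT team's code).
# Each task is identified by one number: the intersection's speed limit in mph.


def train_policy(speed_limit: float) -> dict:
    """Stand-in for RL training: the 'policy' only records the task it was tuned on."""
    return {"tuned_for": speed_limit}


def evaluate(policy: dict, speed_limit: float) -> float:
    """Made-up reward: 1.0 on the training task, dropping 0.02 per mph of mismatch."""
    mismatch = abs(policy["tuned_for"] - speed_limit)
    return max(0.0, 1.0 - 0.02 * mismatch)


# Train once, on a 30 mph intersection.
policy = train_policy(30.0)

# Zero-shot transfer: reuse the same policy on other intersections with no further training.
for target in (30.0, 35.0, 45.0):
    print(f"{target:.0f} mph -> reward {evaluate(policy, target):.2f}")
```

In a real system the reward would come from running the trained policy in a traffic simulator; the linear fall-off here is an assumption chosen only to show that transferred performance usually degrades with task dissimilarity.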

“We know it would be ideal to train on all the tasks, but we wondered if we could get away with training on a subset of those tasks, apply the result to all the tasks, and still see a performance boost,” Wu says.
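The goal Wu describes can be written as a small optimization problem. The notation is introduced here for illustration and is a sketch, not necessarily the paper’s exact formulation: assume N tasks, a training budget of k tasks, and let V(i → j) be the performance on task j of a model trained only on task i and then transferred zero-shot. Each task is handled by whichever trained model does best on it:

```latex
J(S) = \frac{1}{N} \sum_{j=1}^{N} \max_{i \in S} V(i \to j),
\qquad
S^\star = \arg\max_{S \subseteq \{1, \dots, N\},\; |S| \le k} J(S)
```

Choosing S to contain all N tasks corresponds to training a separate model for every task; the question is how close a much smaller S can get to that value.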

To identify which tasks they should select to maximize expected performance, the researchers developed an algorithm called Model-Based Transfer Learning (MBTL).

The MBTL algorithm has two pieces. First, it models how well each algorithm would perform if it were trained independently on one task. Then it models how much each algorithm’s performance would degrade if it were transferred to each other task, a concept known as generalization performance.
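The two pieces can be sketched in a few lines of Python. The modeling choices are assumptions for illustration: tasks are described by a scalar context, the per-task training performance is taken as given (hypothetical numbers), and the generalization gap is assumed to grow linearly with the distance between contexts, controlled by a hypothetical `slope` parameter. The output is a matrix of predicted performance for every (training task, target task) pair.

```python
# Hypothetical sketch of the two quantities MBTL models (illustrative assumptions:
# scalar task contexts, given training-performance estimates, linear generalization gap).


def estimated_transfer_matrix(contexts, train_perf, slope):
    """Return est where est[i][j] predicts performance on task j of a model trained on task i.

    contexts[i]:   scalar description of task i (e.g. a speed limit)
    train_perf[i]: predicted performance when trained and evaluated on task i (piece one)
    slope:         assumed performance lost per unit of context distance (piece two)
    """
    n = len(contexts)
    return [
        [train_perf[i] - slope * abs(contexts[i] - contexts[j]) for j in range(n)]
        for i in range(n)
    ]


contexts = [25.0, 30.0, 35.0, 40.0, 45.0]     # hypothetical speed limits (mph)
train_perf = [0.80, 0.90, 0.95, 0.90, 0.85]   # hypothetical per-task estimates
est = estimated_transfer_matrix(contexts, train_perf, slope=0.01)

# Row 2: the model trained on the 35 mph task, transferred to every task.
print([round(v, 3) for v in est[2]])
```

The diagonal of the matrix is the first piece (training performance); the off-diagonal drop is the second (generalization gap). A richer model could learn either piece from data instead of fixing it by hand.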

Explicitly modeling generalization performance allows MBTL to estimate the value of training on a new task.

MBTL does this sequentially, choosing first the task that leads to the highest performance gain, then selecting additional tasks that provide the biggest subsequent marginal improvements to overall performance.
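A minimal sketch of this sequential selection, assuming the matrix of predicted transfer performance has already been estimated. The numbers are hypothetical and the function names are illustrative, not taken from the paper. At each step the sketch adds the untrained task that most increases the estimated average performance across all tasks, with every task served by its best selected model.

```python
# Hypothetical sketch of greedy (sequential) task selection, not the authors' implementation.


def objective(est, selected):
    """Estimated average performance over all tasks, each served by its best selected model."""
    n = len(est)
    return sum(max(est[i][j] for i in selected) for j in range(n)) / n


def greedy_select(est, budget):
    """Pick up to `budget` training tasks one at a time by largest marginal gain.

    est[i][j] is the predicted performance on task j of a model trained on task i.
    Returns the chosen tasks in order and the estimated objective after each pick.
    """
    n = len(est)
    selected, history = [], []
    for _ in range(min(budget, n)):
        candidates = [i for i in range(n) if i not in selected]
        # The current objective is the same for every candidate, so the candidate with
        # the highest new objective is also the one with the largest marginal gain.
        best = max(candidates, key=lambda i: objective(est, selected + [i]))
        selected.append(best)
        history.append(objective(est, selected))
    return selected, history


# Hypothetical predicted-transfer matrix for four tasks (rows: trained on, columns: applied to).
est = [
    [0.90, 0.70, 0.40, 0.20],
    [0.75, 0.95, 0.70, 0.45],
    [0.45, 0.70, 0.90, 0.75],
    [0.20, 0.45, 0.70, 0.85],
]
chosen, scores = greedy_select(est, budget=2)
print(chosen)  # [1, 2]
print([round(s, 4) for s in scores])
```

Because adding a model never lowers the best predicted performance on any task, the estimated objective never decreases as tasks are added; the loop simply spends the training budget where the model predicts the largest improvement.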

Since MBTL focuses only on the most promising tasks, it can dramatically improve the efficiency of the training process.

Reducing training costs

When the researchers tested this technique on simulated tasks, including controlling traffic signals, managing real-time speed advisories, and executing several classic control tasks, it was 5 to 50 times more efficient than other methods.

This means they could arrive at the same solution by training on far less data. For instance, with a 50x efficiency boost, the MBTL algorithm could train on just two tasks and achieve the same performance as a standard method that uses data from 100 tasks.
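Read as a ratio of the number of tasks trained on, the example works out as follows (an interpretation of the 50x figure, not a formula from the paper):

```latex
\frac{100 \text{ tasks (standard method)}}{2 \text{ tasks (MBTL)}} = 50,
\qquad
100 - 2 = 98 \text{ tasks not trained on}
```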

“From the perspective of the two main approaches, that means data from the other 98 tasks was not necessary, or that training on all 100 tasks is confusing to the algorithm, so the performance ends up worse than ours,” Wu says.

With MBTL, adding even a small amount of additional training time could lead to much better performance.

In the future, the researchers plan to develop algorithms that can extend to more complex problems, such as high-dimensional task spaces. They are also interested in applying their approach to real-world problems, especially in next-generation mobility systems.