AI is ‘an energy hog,’ but DeepSeek could change that

DeepSeek claims to use far less energy than its competitors, but there are still big questions about what that means for the environment.

by Justine Calma

DeepSeek startled everyone last month with the claim that its AI model uses roughly one-tenth the amount of computing power as Meta’s Llama 3.1 model, upending a whole worldview of how much energy and resources it’ll take to develop artificial intelligence.

Taken at face value, that claim could have tremendous implications for the environmental impact of AI. Tech giants are rushing to build out massive AI data centers, with plans for some to use as much electricity as small cities. Generating that much electricity creates pollution, raising fears about how the physical infrastructure undergirding new generative AI tools could exacerbate climate change and worsen air quality.

Reducing how much energy it takes to train and run generative AI models could alleviate much of that stress. But it’s still too early to gauge whether DeepSeek will be a game-changer when it comes to AI’s environmental footprint. Much will depend on how other major players respond to the Chinese startup’s breakthroughs, especially considering plans to build new data centers.

“It just shows that AI doesn’t have to be an energy hog,” says Madalsa Singh, a postdoctoral research fellow at the University of California, Santa Barbara who studies energy systems. “There’s a choice in the matter.”

The fuss around DeepSeek began with the release of its V3 model in December, which only cost $5.6 million for its final training run and 2.78 million GPU hours to train on Nvidia’s older H800 chips, according to a technical report from the company. For comparison, Meta’s Llama 3.1 405B model – despite using newer, more efficient H100 chips – took about 30.8 million GPU hours to train. (We don’t know exact costs, but estimates for Llama 3.1 405B have been around $60 million and between $100 million and $1 billion for comparable models.)
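
As a rough check on the “one-tenth” claim, the two GPU-hour figures quoted above can be compared directly. This is only a back-of-the-envelope sketch: the variable names are illustrative, and GPU hours on different chips (H800 versus H100) are not a precise measure of energy use.

```python
# GPU-hour figures as quoted in the article (from the companies' own reports).
DEEPSEEK_V3_GPU_HOURS = 2.78e6
LLAMA_3_1_405B_GPU_HOURS = 30.8e6

# How many times more GPU hours Llama 3.1 405B needed than DeepSeek V3.
ratio = LLAMA_3_1_405B_GPU_HOURS / DEEPSEEK_V3_GPU_HOURS

# DeepSeek V3's GPU hours as a share of Llama 3.1 405B's.
share = DEEPSEEK_V3_GPU_HOURS / LLAMA_3_1_405B_GPU_HOURS

print(f"Llama 3.1 405B used about {ratio:.1f}x the GPU hours of DeepSeek V3")
print(f"DeepSeek V3 used about {share:.0%} of Llama 3.1 405B's GPU hours")
```

The ratio comes out to roughly 11 to 1, which is consistent with the “roughly one-tenth” figure.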

Then DeepSeek released its R1 model last week, which venture capitalist Marc Andreessen called “a profound gift to the world.” The company’s AI assistant quickly shot to the top of Apple’s and Google’s app stores. And on Monday, it sent competitors’ stock prices into a nosedive on the assumption DeepSeek was able to create an alternative to Llama, Gemini, and ChatGPT for a fraction of the budget. Nvidia, whose chips enable all these technologies, saw its stock price plummet on news that DeepSeek’s V3 only needed 2,000 chips to train, compared to the 16,000 chips or more needed by its competitors.

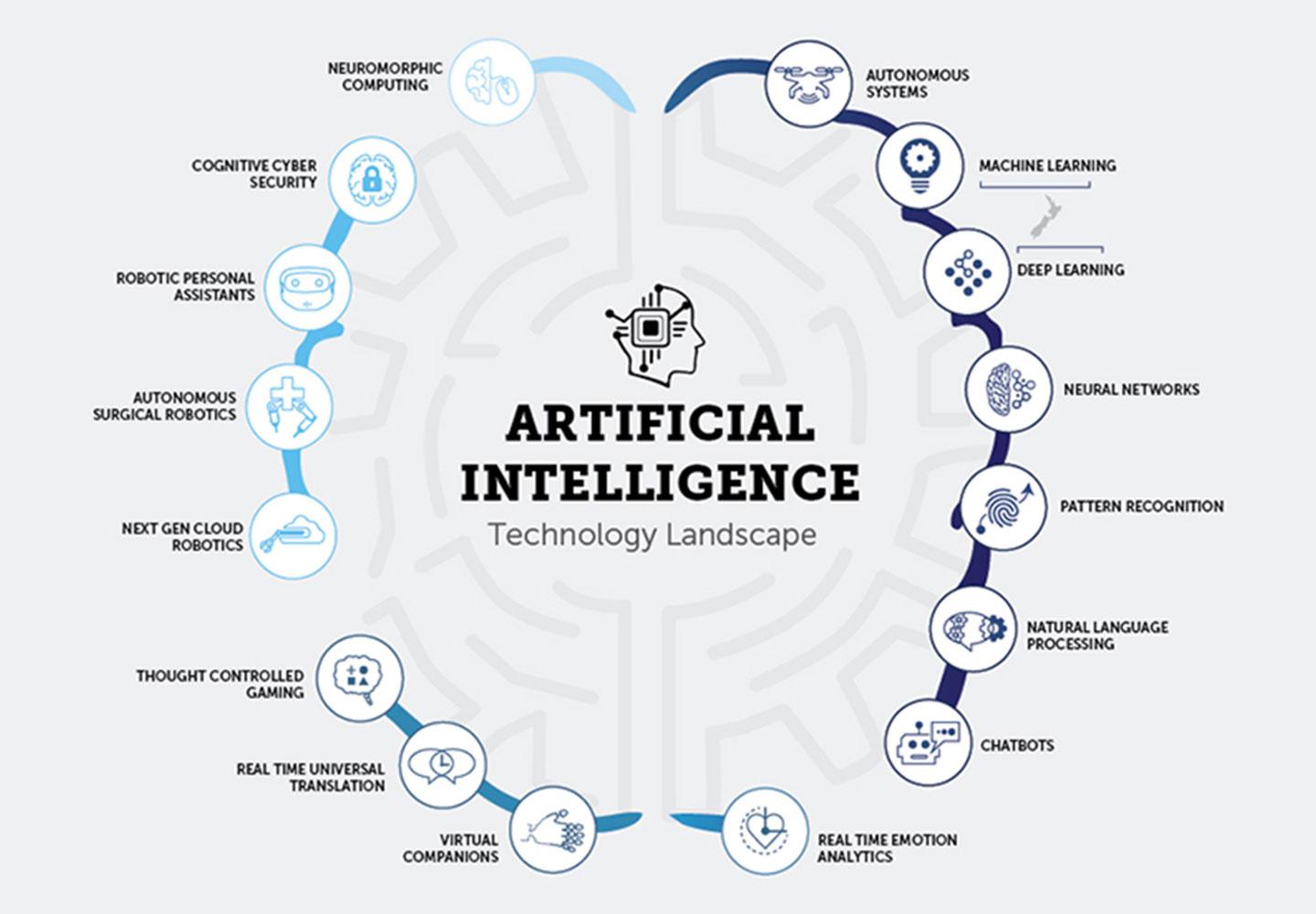

DeepSeek says it was able to cut down on how much electricity it consumes by using more efficient training methods. In technical terms, it uses an auxiliary-loss-free strategy. Singh says it boils down to being more selective with which parts of the model are trained; you don’t have to train the entire model at the same time. If you think of the AI model as a big customer service firm with many experts, Singh says, it’s more selective in choosing which experts to tap.
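
The “many experts” analogy can be illustrated with a toy router that activates only the top-scoring experts for a given input. This is a minimal sketch of generic top-k expert selection, not DeepSeek’s actual implementation; the function name `route_to_experts`, the scores, and the choice of `k` are all hypothetical.

```python
import math

def route_to_experts(scores, k):
    """Pick the k highest-scoring experts and softmax-normalize their weights.

    `scores` is a hypothetical list of router affinities, one per expert.
    """
    # Indices of the k experts with the highest scores.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Normalize only over the selected experts so their weights sum to 1.
    exps = [math.exp(scores[i]) for i in top]
    total = sum(exps)
    weights = [e / total for e in exps]
    return top, weights

# Made-up affinities for eight experts; only two are consulted.
scores = [0.1, 2.0, -1.0, 1.5, 0.3, -0.2, 0.9, 0.0]
experts, weights = route_to_experts(scores, k=2)
active_fraction = len(experts) / len(scores)

print(experts)          # indices of the selected experts
print(active_fraction)  # share of experts that do any work for this input
```

In this toy setup only a quarter of the experts do any work for the input, which is the intuition behind the savings Singh describes.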

The model also saves energy when it comes to inference, which is when the model is actually tasked to do something, through what’s called key value caching and compression. If you’re writing a story that requires research, you can think of this method as similar to being able to reference index cards with high-level summaries as you’re writing rather than having to reread the entire report that’s been summarized, Singh explains.
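
The index-card analogy can be sketched with a toy cache that stores each token’s key and value once and reuses them at every later step. This is an illustrative sketch only: `project` is a hypothetical stand-in for a model’s key/value projection, and the compression step mentioned above is not shown.

```python
def project(token):
    """Hypothetical stand-in for computing one token's key and value."""
    return len(token), sum(map(ord, token))

class KVCache:
    """Stores keys and values so earlier tokens are never re-projected."""

    def __init__(self):
        self.keys = []
        self.values = []
        self.projections = 0  # how many times project() was called

    def append(self, token):
        key, value = project(token)
        self.projections += 1
        self.keys.append(key)
        self.values.append(value)

def projections_with_cache(tokens):
    """Each decoding step projects only the newest token."""
    cache = KVCache()
    for token in tokens:
        cache.append(token)
    return cache.projections

def projections_without_cache(tokens):
    """Each decoding step re-projects every token seen so far."""
    count = 0
    for step in range(1, len(tokens) + 1):
        for token in tokens[:step]:
            project(token)
            count += 1
    return count

tokens = ["AI", "is", "an", "energy", "hog"]
print(projections_with_cache(tokens))     # grows linearly with length
print(projections_without_cache(tokens))  # grows quadratically with length
```

For five tokens the cached version does 5 projections and the uncached version does 15, and the gap widens as the text gets longer.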

What Singh is especially optimistic about is that DeepSeek’s models are mostly open source, minus the training data. With this approach, researchers can learn from each other faster, and it opens the door for smaller players to enter the industry. It also sets a precedent for more transparency and accountability so that investors and consumers can be more critical of what resources go into developing a model.

“If we’ve demonstrated that these advanced AI capabilities don’t require such massive resource consumption, it will open up a little bit more breathing room for more sustainable infrastructure planning,” Singh says. “This can also incentivize these established AI labs today, like OpenAI, Anthropic, Google Gemini, towards developing more efficient algorithms and techniques and move beyond sort of a brute force approach of simply adding more data and computing power onto these models.”

To be sure, there’s still uncertainty around DeepSeek. “We’ve done some digging on DeepSeek, but it’s hard to find any concrete facts about the program’s energy consumption,” Carlos Torres Diaz, head of power research at Rystad Energy, said in an email.

If what the company claims about its energy use is true, that could slash a data center’s total energy consumption, Torres Diaz writes. And while big tech companies have signed a flurry of deals to procure renewable energy, soaring electricity demand from data centers still risks siphoning limited solar and wind resources from power grids. Reducing AI’s electricity consumption “would in turn make more renewable energy available for other sectors, helping displace faster the use of fossil fuels,” according to Torres Diaz. “Overall, less power demand from any sector is beneficial for the global energy transition as less fossil-fueled power generation would be needed in the long-term.”

There is a double-edged sword to consider with more energy-efficient AI models. Microsoft CEO Satya Nadella posted on X about Jevons paradox, in which the more efficient a technology becomes, the more likely it is to be used. The environmental damage grows as a result of efficiency gains.

“The question is, gee, if we could drop the energy use of AI by a factor of 100 does that mean that there’d be 1,000 data providers coming in and saying, ‘Wow, this is great. We’re going to build, build, build 1,000 times as much even as we planned’?” says Philip Krein, research professor of electrical and computer engineering at the University of Illinois Urbana-Champaign. “It’ll be a really interesting thing over the next 10 years to watch.” Torres Diaz also said that this issue makes it too early to revise power consumption forecasts “substantially down.”
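
Krein’s hypothetical can be worked through as simple arithmetic. The sketch below uses only the two numbers from his quote; the function name `net_energy_multiplier` is illustrative, and the figures are his thought experiment, not a forecast.

```python
def net_energy_multiplier(efficiency_gain, demand_growth):
    """Change in total energy use when each unit of AI work gets
    `efficiency_gain` times cheaper and the amount of work grows
    by a factor of `demand_growth`."""
    return demand_growth / efficiency_gain

# Krein's hypothetical: 100x more efficient, 1,000x more build-out.
multiplier = net_energy_multiplier(efficiency_gain=100, demand_growth=1000)
print(f"Total energy use changes by a factor of {multiplier:g}")

# If demand stayed flat instead, the efficiency gain would pass straight through.
flat_demand = net_energy_multiplier(efficiency_gain=100, demand_growth=1)
print(f"With flat demand, the factor would be {flat_demand:g}")
```

Under those assumed numbers, total energy use would rise tenfold despite the hundredfold efficiency gain, which is the outcome Jevons paradox warns about.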

No matter how much electricity a data center uses, it’s important to look at where that electricity is coming from to understand how much pollution it creates. China still gets more than 60 percent of its electricity from coal, and another 3 percent comes from gas. The US also gets about 60 percent of its electricity from fossil fuels, but a majority of that comes from gas, which creates less carbon dioxide pollution when burned than coal.

To make things worse, energy companies are delaying the retirement of fossil fuel power plants in the US in part to meet skyrocketing demand from data centers. Some are even planning to build out new gas plants. Burning more fossil fuels inevitably leads to more of the pollution that causes climate change, as well as local air pollutants that raise health risks to nearby communities. Data centers also guzzle up a lot of water to keep hardware from overheating, which can lead to more stress in drought-prone regions.

Those are all problems that AI developers can minimize by limiting energy use overall. Traditional data centers have been able to do so in the past. Despite workloads almost tripling between 2015 and 2019, power demand managed to stay relatively flat during that time period, according to Goldman Sachs Research. Data centers then grew much more power-hungry around 2020 with advances in AI. They consumed more than 4 percent of electricity in the US in 2023, and that could nearly triple to around 12 percent by 2028, according to a December report from the Lawrence Berkeley National Laboratory. There’s more uncertainty about those kinds of projections now, but calling any shots based on DeepSeek at this point is still a shot in the dark.